What are Power BI Dataflows?

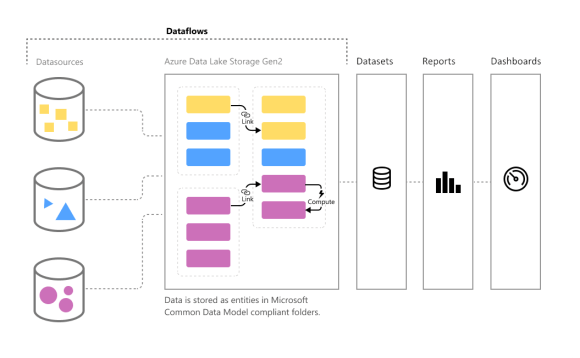

Power BI Dataflows are a cloud-based ETL (Extract, Transform, Load) service provided by Microsoft Power BI. They allow users to easily create and manage data pipelines, which can be used to extract data from various sources, transform and clean the data, and then load it into a destination where it can be consumed by Power BI reports and dashboards.

Dataflows are designed to help users solve the common data integration challenges they face when working with data from various sources. They offer a range of pre-built connectors to various data sources such as Dynamics 365, SharePoint, Salesforce, Excel, and more.

Dataflows offer several benefits, such as reducing data preparation time, ensuring data consistency and accuracy, and improving data governance and security. They also help users to create a single version of the truth by centralizing data preparation and management, which can lead to better decision-making and insights.

What is the difference between Power BI Dataflows and Power Query?

Power BI Dataflows and Power Query are both tools for data transformation and modeling within the Power BI ecosystem, but there are some key differences between the two.

- Power Query is a data transformation and cleansing tool that is used within Power BI Desktop and Excel. It is primarily designed to help users clean, transform, and shape data from a wide variety of sources, such as databases, spreadsheets, web pages, and other data sources. Power Query provides an intuitive user interface that allows users to visually build data transformation steps, and it uses a functional language called “M” to perform data transformations.

- Power BI Dataflows, on the other hand, are cloud-based ETL services that are used to prepare and transform data for use in Power BI reports and dashboards. They allow users to create reusable data transformation pipelines that can extract data from various sources, apply transformations to that data, and then load it into a destination where it can be consumed by Power BI.

- One of the main differences between Power Query and Power BI Dataflows is that Power Query is a desktop-based tool, while Dataflows are cloud-based. This means that Power Query can be used offline and provides more control over the data transformation process, while Dataflows offer the benefits of cloud-based processing, such as automatic scaling and maintenance.

- Another difference is that Power BI Dataflows are designed for more complex data transformation scenarios, where multiple data sources need to be combined and transformed in a repeatable and automated way. Dataflows also offer additional capabilities, such as data profiling and lineage tracking, which are not available in Power Query.

How do I create a Power BI Dataflow with Business Central data?

- Step 1: Create a Dataflow

- To create a dataflow, go to the Power BI Service, click on Create, and select Dataflow.

- Then select Define new tables.

- From there, you can select the Business Central data source.

- Note that dataflows aren’t available in my-workspace in the Power BI service. First you need to create a custom workspace, and that requires a Power BI Pro or Premium account.

- Next connect to Business Central. You can leave the Environment/Company/API blank if you want, but you have to sign-in with an account that has access to Business Central.

- Next Dataflows displays the Business Central Environments and Companies you have access to. Expand them, and then select the APIs and/or web services you want to connect to.

- To create a dataflow, go to the Power BI Service, click on Create, and select Dataflow.

- Step 2: Transform Your Data

- Next, you can transform your Business Central data. The Dataflow Editor provides a wide range of data transformation options, including filtering, merging, splitting, aggregating, and more.

You can use these transformations to clean and reshape your data to fit your business needs. - Then Close and Save your Dataflow.

- Next, you can transform your Business Central data. The Dataflow Editor provides a wide range of data transformation options, including filtering, merging, splitting, aggregating, and more.

- Step 3: Schedule Refreshes

- Power BI Dataflows allows you to schedule automatic refreshes of your data. You can set up a refresh schedule to ensure that your data is always up-to-date.

You can choose to refresh your data daily, weekly, or monthly, depending on your business needs.

- Dataflows can also be set to refresh incrementally. To do so, select the dataflow you wish to set up for incremental refresh, and then choose the Incremental Refresh icon.

- Power BI Dataflows allows you to schedule automatic refreshes of your data. You can set up a refresh schedule to ensure that your data is always up-to-date.

- Step 4: Use Your Data

- By putting your data into a dataflow you can use Power BI Desktop and the Power BI service to create datasets, reports, dashboards, and apps.

- To consume a dataflow, open Power BI Desktop and select Dataflows in the Get Data dropdown.

- Select the dataflow and tables to which you want to connect.

What are advantages of using Power BI Dataflows?

There are several advantages of using Power BI Dataflows in your business intelligence solution:

- Data integration: Power BI Dataflows provides a unified and standardized way to integrate data from various sources. This ensures that your data is consistent and accurate across all your reports and dashboards.

- Data transformation: With Power BI Dataflows, you can easily transform your data to fit your business needs. This includes filtering, merging, splitting, aggregating, and more. This allows you to create customized views of your data that provide insights into your business.

- Data cleansing: Power BI Dataflows provides tools for data cleansing, such as removing duplicates, correcting errors, and validating data. This helps ensure that your data is clean and accurate, which is crucial for making informed business decisions.

- Reusability: Once you create a dataflow, you can reuse it in multiple reports and dashboards. This saves time and effort, as you don’t have to recreate the same data transformations every time you create a new report or dashboard.

- Automation: Power BI Dataflows allows you to automate the data transformation and loading process. You can schedule automatic refreshes of your data, so your reports and dashboards are always up-to-date. This frees up time and resources, as you don’t have to manually refresh your data every time you want to view a report.

- Collaboration: Power BI Dataflows supports collaboration, allowing multiple users to work on the same dataflow. This makes it easy to share data across departments or with external stakeholders, such as customers or vendors.

Power BI Dataflows provides a powerful way to manage and transform your data, allowing you to create customized views of your data and gain valuable insights into your business.

For more information, have a look here:

- Introduction to dataflows and self-service data prep – Power BI | Microsoft Learn

- Creating a dataflow – Power BI | Microsoft Learn

- Configure and consume a dataflow – Power BI | Microsoft Learn

- Dataflows best practices – Power BI | Microsoft Learn

- Understanding and optimizing dataflows refresh – Power BI | Microsoft Learn

- Dataflows Limitations, restrictions and supported connectors and features – Power BI | Microsoft Learn

How cool is that?

Let’s ask ChatGPT:

Discover more from think about IT

Subscribe to get the latest posts sent to your email.

Hello,

very nice article. I was wondering if you are using Dataflow in your Power BI projects. Would you use dataflow for a database that is producing 3k sales orders every day and also a budget for sales is considered to be used for the dimensional reporting.

I was wondering also if it make sense for on-premises installations that it is better to connect to SQL database directly or better use SQL dedicated views with already predefined SQL statements in it.

Thanks,

Luke

Hi Luke,

It depends. I would never import all sales orders in a dataflow or a Power BI report.

Usually in Power BI you need to display and analyze aggregated data, at a certain level of granularity. In the BC tables there are a lot of ‘details’ (order lines) that need to be summarized in Power BI (or Excel), using for example dataflows.

So I would first create/develop a query, that selects the required lines and aggregates them, and them use that to import in a dataflow.

A customer can use BC to see the ‘details’ (sales orders and lines), and they can use Power BI (or Excel) to see the aggregated data. Individual orders (or lines), imho, do not belong in a BI report.

At the moment that performance or the dataflow limitations would become an issue, then I indeed suggest to look in other technologies, like building a DWH.

I also so not recommend to connect directly to the SQL db, for on-prem installations. Connecting to SQL will be faster, you can create views, stored procedures,… but that kind of solution will not be BC-Saas compatible. And when the customer decides later to move to BC-Saas, you have to then develop another solution. Best is, also for on prem, to try to work with APIs.

However, another option is to work with incremental refresh in the dataflows. That way only the records that were changed since the last refresh are loaded.

I fully agree with the article’s explanation of Power BI Dataflows. Microsoft’s cloud-based ETL service provides a convenient solution for creating and managing data pipelines, enabling easy extraction, transformation, and loading of data into Power BI reports and dashboards. The pre-built connectors and various benefits, such as time-saving data preparation, data consistency, and improved governance, make Dataflows a powerful tool for efficient data management and decision-making.

Hi,

has anyone checked if the incremental refresh with data from BC-APIs is actually working?

For one thing, I am getting a warning from Power BI which tells me that it could not be determined, if query folding is happening for the query. I have tried to research this topic before and was unable to determine if query folding is taking place when calling BC-APIs. My guess is that it is not. The only case in which I can get my M-Queries to fold is when importing data from SQL Server. Either directly from BC or from a Data Warehouse. In both cases, the incremental load is working fine.

The second thig is that the refresh of a large table which I am importing from BC SaaS does not seem to have sped up. It is still transferring which seems to be the whole table. If anything, the query seems to have gotten a bit slower.

Any input / experiences on this would be much appreciated.

Cheers!